Introduction:

This semester’s last exercise will be based on the digitization of real-world features with a handheld GPS unit. For it, we will be returning to the Priory and dividing into groups of three that will navigate the land to collect field data from three different categories of information. This is the same property that the class has traversed four times over the course of the semester, and from experience we know that it has a number of trails crisscrossing well over one hundred acres of wooded hills.

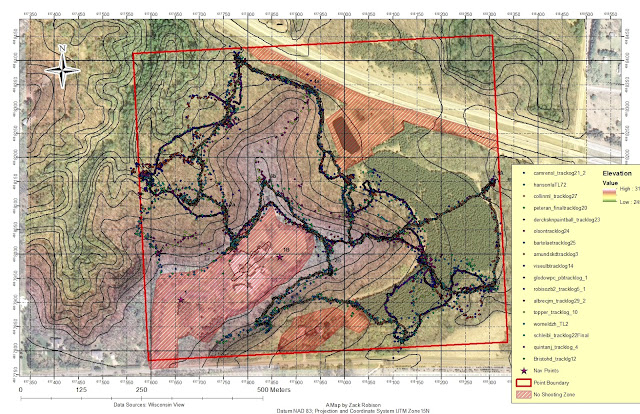

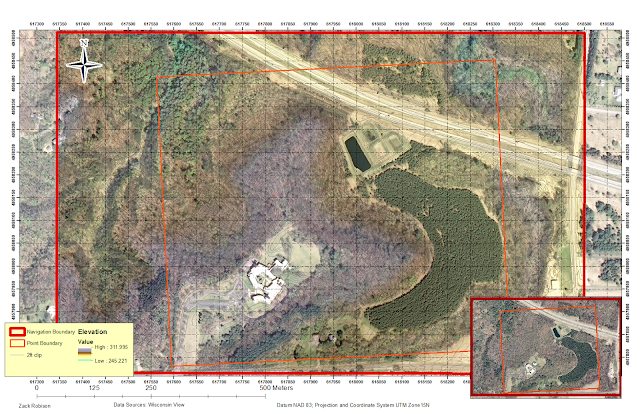

| Figure 1: The Priory |

| fig 2: Creating a Domain |

| fig 3: Setting a domain in a feature class |

After developing these domains, the ArcPad Data Manager extension was used to transfer the geodatabase to the chosen GPS unit. Along with the return to the Priory, this final installment of our field methods class brings back the Trimble Juno handheld GPS unit (fig 4), the device the database was uploaded to before we headed into the field. This process was simplified by the “Get Data for ArcPad” wizard, launched by clicking the “Get Data for ArcPad” button on the ArcPad toolbar (fig 5). The wizard guides the user through selecting which data to export to the Juno, along with photograph options for feature classes, output options, and extraction criteria.


| fig 4: the JUNO |

Data collection was a fairly straightforward process: our group simply set out on a path through the Priory, following a major footpath, and digitized the locations of our selected features as we encountered them. Because none of the selected features were continuous, each group member collected every feature, in case problems arose with the data collected by one (or two) of the group members.

| figure 5: the ArcPad toolbar. The first button, with an arrow, deploys data to a GPS unit; the button with a left arrow imports data from one |

Finally, once we were satisfied with the data collected, it was returned to the GIS and prepared into a map that displayed the collected data by feature. The importing was also done through a wizard accessed from the toolbar, called “Get Data from ArcPad” (fig 5). Esri doesn’t fool around with the names for their tools, which is nice because it makes them easier to use. After completing the steps outlined by this wizard (which merely consist of selecting the data to upload onto the computer), the data was successfully imported and manipulated into a usable map.


| fig 6: the Final Product |

The first takeaway that I had from this exercise, and the first bit of advice that I have for anyone trying to recreate it, is to know your technology. Twice the technology failed my group in this exercise: first in my attempts to upload my database to a GPS unit that, unbeknownst to me, was known to be faulty, and again when my team member Joey was mysteriously unable to acquire a satellite fix with his Juno unit. Countless minutes of my life were washed away in a futile effort to do something that ultimately could not be done, and an opportunity to gather data was similarly wasted by the technology failing to work. I could not help but appreciate the irony that the final project done in this class harkened back to one of the very first lessons imparted to us by Professor Hupy: don’t trust technology; if it can fail, it will, and often at the worst possible time. Fortunately, Brandon and I had also been collecting the data that would otherwise have been Joey’s domain, and the group successfully completed the task of mapping three different features at the Priory.

Another takeaway is that the handheld GPS is not always the most accurate data collection unit, especially when the sky is obscured by trees. While much of the data collected by Brandon and me matched closely, some simply did not. Combined with the equipment failures described in the previous paragraph, this is a lesson in the limitations of GPS data and a reminder of the importance of knowing how much you are willing to trust the data given to you.
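For anyone who wants to put a number on how closely two collectors' points agree, here is a minimal sketch in Python. It is not what our group actually did; it assumes each person's points have been exported as latitude/longitude pairs keyed by a shared feature ID. The function names, the 10 m tolerance, and the sample coordinates are all hypothetical and chosen only for illustration.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters (spherical approximation)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two lat/lon points in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def flag_disagreements(points_a, points_b, tolerance_m=10.0):
    """Return {feature_id: distance_m} for features both collectors recorded
    whose two positions differ by more than tolerance_m (an assumed threshold)."""
    flagged = {}
    for fid in points_a.keys() & points_b.keys():
        d = haversine_m(*points_a[fid], *points_b[fid])
        if d > tolerance_m:
            flagged[fid] = d
    return flagged


# Hypothetical sample data: feature ID -> (latitude, longitude)
collector_1 = {"bench_1": (44.7500, -91.5000), "tree_1": (44.7510, -91.5000)}
collector_2 = {"bench_1": (44.7500, -91.5000), "tree_1": (44.7520, -91.5000)}

disagreements = flag_disagreements(collector_1, collector_2)
for fid, dist in sorted(disagreements.items()):
    print(f"{fid}: collectors differ by {dist:.1f} m")
```

Any feature that gets flagged is a candidate for a second look, whether that means re-collecting the point under open sky or simply deciding which collector's position to trust.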
