Professor Hupy designed this lab both to further our understanding of field methods and (of course) to improve our ability to provide low-tech solutions when, in a pinch, high-tech plans fall through. In it, we will use the distance-azimuth method of surveying to plot points of interest (of our choosing) in a quarter hectare (2,500 sq. meters, also of our choosing). In teams of two, we will survey our points twice: both sets of data will be distance-azimuth, but they will be collected with two different sets of instruments. The first data set comes from a compass paired with a radio-based rangefinder, which stands in for the rather unwieldy, if sometimes necessary, tape measure. The second data set will be collected with a laser rangefinder.

fig. 1 We chose the worst time of day to go outside. Courtesy Wunderground.

What we felt we were doing

What we actually were doing. Say hi to Joey, he's the one in the middle.

After collecting our data we will import both sets into a GIS and overlay the data sets with a base aerial of the area covered to assess the success or failure of our endeavor. Ideally, the points surveyed with both systems will line up accurately with the aerial. We selected the backyard area of a residence hall on campus (the area bordering both Horan and Governor's, for those curious) which seemed a good choice because of the myriad trees in the region, sprinkled with a smattering of other objects such as benches and disc golf holes to map.

Methodology:

Distance-azimuth surveys are quite simple. The surveyor stands on a known point and measures the distance and the angle (azimuth) to each desired survey point. Then, back inside, the surveyor can use simple geometry to determine the location of the survey points from their relationship to the known point. It is therefore essential that the angle be measured accurately, so the budding surveyor must have a working understanding of magnetic declination. Because of variations in the earth's molten metal core (of which I have only the most rudimentary understanding), magnetic north constantly shifts relative to true north. Since magnetic compasses point toward this magnetic north, and as good geographers we want our data to be accurate against the standard true north (pole), we must find the angular difference between true north and magnetic north at our location on the earth.
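For the curious, the "simple geometry" can be sketched in a few lines of Python. This is only an illustration under a flat-earth approximation that should be fine for a plot a few tens of meters across; it is not what the GIS does internally. The function name `offset_point` and the mean earth radius constant are my own assumptions.

```python
import math

# Mean earth radius in meters (an assumed constant, adequate for a small plot).
EARTH_RADIUS_M = 6371000.0

def offset_point(lon_deg, lat_deg, distance_m, azimuth_deg):
    """Return (lon, lat) of a point distance_m away from a known point.

    azimuth_deg is measured clockwise from true north, so the east offset
    uses the sine of the azimuth and the north offset uses the cosine.
    """
    az = math.radians(azimuth_deg)
    east_m = distance_m * math.sin(az)
    north_m = distance_m * math.cos(az)
    # Convert meter offsets to degrees; longitude degrees shrink with latitude.
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lon_deg + dlon, lat_deg + dlat

# Example: a point 25 m due east of a known point.
lon, lat = offset_point(-91.50696, 44.79873, 25.0, 90.0)
```

Note that the azimuth fed into this must already be a true azimuth, which is exactly why declination matters.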

fig 4 Magnetic Declination, courtesy NOAA.

Luckily for us Wisconsinite compass users, this declination is rather small in the area (see fig 4 above; the line of no declination ran just west of us in 2010). The exact declination for any location on earth at any time is conveniently provided free to the public by the wonderful folks at NOAA's geophysical data center through their magnificent declination calculator. The declination at the time of our data collection was determined to be 58' W (a little under one degree), which means that for true accuracy in our azimuths the laser rangefinder must be calibrated and the compass data must be rotated that far west.
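To make the correction concrete, here is a minimal sketch of converting the 58' W declination to decimal degrees and applying it to a compass reading. It assumes the usual sign convention (east declination positive, west negative), and the helper names are made up for illustration.

```python
def dm_to_decimal(degrees, minutes, hemisphere):
    """Convert degrees and minutes to signed decimal degrees (W is negative)."""
    value = degrees + minutes / 60.0
    return -value if hemisphere.upper() == "W" else value

def magnetic_to_true(magnetic_azimuth_deg, declination_deg):
    """True azimuth = magnetic azimuth + declination, wrapped into [0, 360)."""
    return (magnetic_azimuth_deg + declination_deg) % 360.0

# 58 minutes west, i.e. roughly -0.967 degrees.
DECLINATION_DEG = dm_to_decimal(0, 58, "W")

# A compass reading of 90 degrees becomes a true azimuth just under 90.
true_azimuth = magnetic_to_true(90.0, DECLINATION_DEG)
```

A west declination makes every true azimuth slightly smaller than the compass reading, which is the "rotate that far west" step in numbers.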

Understanding this, we calibrated our tools and set out to collect our data. One team member would walk to each individual point with one radio receiver/transmitter while another stood at the reference point with the other unit, which used radio waves to determine the distance between the two points. The person at the reference point would also use a compass to determine the azimuth to the point being surveyed. A third person would use a TruPulse 360 laser rangefinder (fig 5) to determine both azimuth and distance to the survey point simultaneously, while a fourth person took down the data.

fig 5 TruPulse "Laser" rangefinders

fig 6 How the Laser Range Finders will look in the future.

Unfortunately, we found a few issues with our selected quarter hectare, which will be discussed later, but eventually 32 unique data points were collected using both methods. These points were then entered into an Excel file (fig 7). A reference point was determined from georeferenced aerial imagery and added to this file, which was then imported into our ArcInfo geodatabase. I decided to record the reference point in decimal degrees because the GIS can read them directly; I can project the data later if needed, but doing so now is not important for the purposes of this exercise. The reference point was determined to be longitude -91.50696, latitude 44.79873 (decimal degrees, so roughly 91.5° W, 44.8° N). With each point we also collected attribute data describing what we were surveying (for example a tree, a post or a table) so that we can more effectively match our data to an aerial later.
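As a rough sketch of what such a table looks like, the snippet below builds a small CSV in which every row repeats the reference point coordinates alongside that row's distance, azimuth and attribute. The column names and the three observations are hypothetical stand-ins, not our actual field data.

```python
import csv
import io

# Reference point from the text, in decimal degrees.
REFERENCE_LON = -91.50696
REFERENCE_LAT = 44.79873

# Hypothetical observations: (feature type, distance in meters, azimuth in degrees).
observations = [
    ("tree", 12.4, 35.0),
    ("bench", 20.1, 118.5),
    ("disc golf hole", 31.7, 262.0),
]

def build_survey_csv(rows):
    """Return CSV text with one row per surveyed point.

    Each row carries the same origin (x, y) because every measurement
    was taken from the single reference point.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["id", "x", "y", "distance_m", "azimuth_deg", "feature"])
    for point_id, (feature, distance_m, azimuth_deg) in enumerate(rows, start=1):
        writer.writerow([point_id, REFERENCE_LON, REFERENCE_LAT,
                         distance_m, azimuth_deg, feature])
    return buffer.getvalue()

survey_csv = build_survey_csv(observations)
```

Keeping the attribute column next to the measurements is what lets the points be matched to trees, benches and so on once they are drawn on the aerial.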

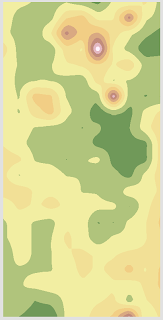

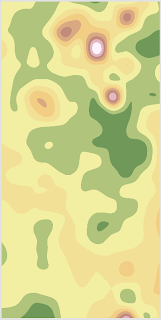

The tables were run through the Bearing Distance To Line tool and then the Feature Vertices To Points tool, both provided with the GIS (under Data Management, then Features). A nice data flow model (fig 7) for this process can be found on stackexchange, along with an explanation of the process. Finally, an unprojected aerial basemap was added so that we could compare our data against a previously georeferenced image. The final product is figure 9 below; I digitized some goal points for reference. Compass and radio points are in blue; data collected by laser are in red. Unfortunately, the data do not line up especially well. For example, points with a more easterly azimuth were consistently farther off than other points, but more on that below.
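"Do not line up especially well" could be put into meters. The sketch below is one way to do that outside the GIS, assuming a small-area planar approximation; the function name and the example coordinates are invented for illustration and are not our measured values.

```python
import math

# Mean earth radius in meters (an assumed constant, adequate for a small plot).
EARTH_RADIUS_M = 6371000.0

def planar_error_m(lon1, lat1, lon2, lat2):
    """Approximate ground distance in meters between two lon/lat points.

    Uses an equirectangular approximation, which is reasonable when the
    points are only tens of meters apart.
    """
    mean_lat = math.radians((lat1 + lat2) / 2.0)
    dx = math.radians(lon2 - lon1) * math.cos(mean_lat) * EARTH_RADIUS_M
    dy = math.radians(lat2 - lat1) * EARTH_RADIUS_M
    return math.hypot(dx, dy)

# Hypothetical pair: a surveyed point and the goal point digitized from the aerial.
surveyed = (-91.50680, 44.79880)
digitized = (-91.50677, 44.79882)
error_m = planar_error_m(*surveyed, *digitized)
```

Running this for every surveyed point against its goal point would give a per-point error that could be compared between the two instruments.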

fig 8 Bearing Distance to Line with the end Points.

fig 9 The final product, with some reference points in purple.

Discussion:

As you can see, not only did the data collected with the two methods not match each other, they didn't match the terrain. Several factors could be at play in this discrepancy. The most problematic is that some of our points lay behind other points (trees) and could not be surveyed from our reference point; adding a second or third reference point would probably have alleviated this problem. It was cold and windy, and two team members were operating without gloves, so there was also a decent incentive not to collect the same data point more than needed; a few discrepancies could therefore be attributed to user error or hurried work. The most prominent pattern on the map is that the east half of the laser data has consistently more extreme azimuths. It is possible that the building whose shadow we stood in interfered with the magnetic equipment, because obviously we didn't survey our points through the corner of Horan Hall.
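One way to check the east-half hunch with numbers rather than by eye would be to compare the two azimuths recorded for each point and average the disagreement on each side of the north-south line. The sketch below shows the idea; the function names and the sample pairs are hypothetical, not our survey data.

```python
def azimuth_difference(a_deg, b_deg):
    """Signed difference a - b in degrees, wrapped into [-180, 180)."""
    return (a_deg - b_deg + 180.0) % 360.0 - 180.0

def mean_abs_difference_by_half(pairs):
    """Average absolute laser/compass disagreement for east and west halves.

    pairs holds (laser_azimuth, compass_azimuth) tuples in degrees.
    A laser azimuth in [0, 180) counts as east, anything else as west.
    """
    halves = {"east": [], "west": []}
    for laser_az, compass_az in pairs:
        half = "east" if laser_az % 360.0 < 180.0 else "west"
        halves[half].append(abs(azimuth_difference(laser_az, compass_az)))
    return {name: (sum(vals) / len(vals) if vals else None)
            for name, vals in halves.items()}

# Hypothetical readings for three points.
sample_pairs = [(80.0, 70.0), (100.0, 96.0), (270.0, 269.0)]
summary = mean_abs_difference_by_half(sample_pairs)
```

The wrap-around in `azimuth_difference` matters near north: readings of 359 and 1 degrees disagree by 2 degrees, not 358. A noticeably larger east-side average would support the idea that something near the building was disturbing the magnetic readings.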

Unfortunately, the spatial and temporal resolution of the image that we are comparing our data to is not very appropriate either. For starters, in winter those trees look a lot different than they do in summertime. One of the points surveyed was an unusually large snow pile, which of course would look more different still in the summer. Some of the smaller features that we collected are also impossible to see, either because the resolution is too coarse or because they are covered by foliage. Our quarter hectare was also too small to collect as many points as we wanted: the goal was fifty, but even if we could have included the objects hiding behind each other we still wouldn't have managed that many points of interest. And we cheated, both by surveying outside of our quarter hectare and by using fluffy points of interest, such as snow piles.

Altogether, I am still rather pleased with our end result. I am disappointed in the lack of accuracy; however, our radio/compass data were consistently within a few meters of our goal points, which is surprising given how difficult the radio rangefinder occasionally was to operate. I would certainly have preferred to use a total station for this job: I would have more confidence in the precision and reliability of my data, plus I could remove the step of trying to locate myself on an aerial photograph after having already collected my data. However, by comparison I have to admit there isn't much involved in this process: one handheld tool and a recording device are all that is required to survey the type of information that we were collecting. I like to travel light.